In the digital era, AI-driven chatbots have become ubiquitous, transforming how we interact with information and automate processes across various sectors. From customer service to content creation, chatbots, powered by advancements in large language model (LLM) technology, are reshaping the interactive landscape. However, the rapid integration of these tools into daily operations comes with its own set of challenges, particularly concerning the accuracy and reliability of the information they generate. This blog explores the concept of “botshit”—the misleading or inaccurate content produced by chatbots—and offers strategies to manage these knowledge risks effectively.

“Botshit” refers to instances where chatbots, despite their sophisticated algorithms, generate coherent yet factually incorrect or misleading content. This phenomenon arises because chatbots do not truly “understand” the data they process; rather, they predict responses based on patterns identified in their training data. Consequently, this can lead to what is known as “hallucinations,” or responses that, while sounding plausible, are entirely fabricated or distorted.

The risks associated with botshit are not trivial. They range from minor inaccuracies that cause confusion to major errors that could lead to financial loss, reputational damage, or even legal challenges. As such, identifying and mitigating the epistemic risks of chatbot interactions is crucial for organizations that rely on these technologies for delivering critical information and services.

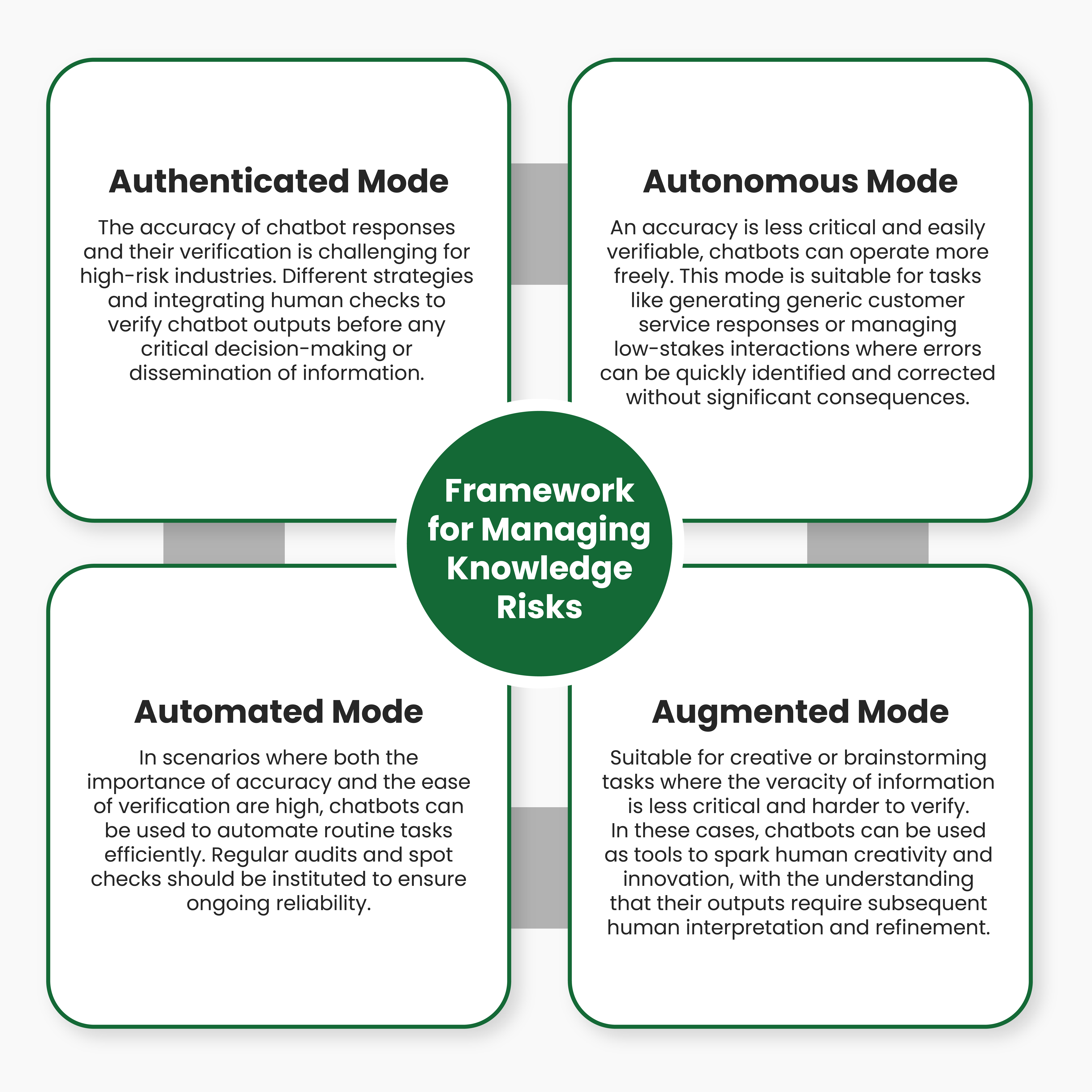

To effectively manage the risks posed by AI chatbots, organizations can adopt a structured framework that categorizes chatbot usage based on the importance of response accuracy and the feasibility of verifying these responses. Here’s how organizations can navigate these risks across different scenarios:

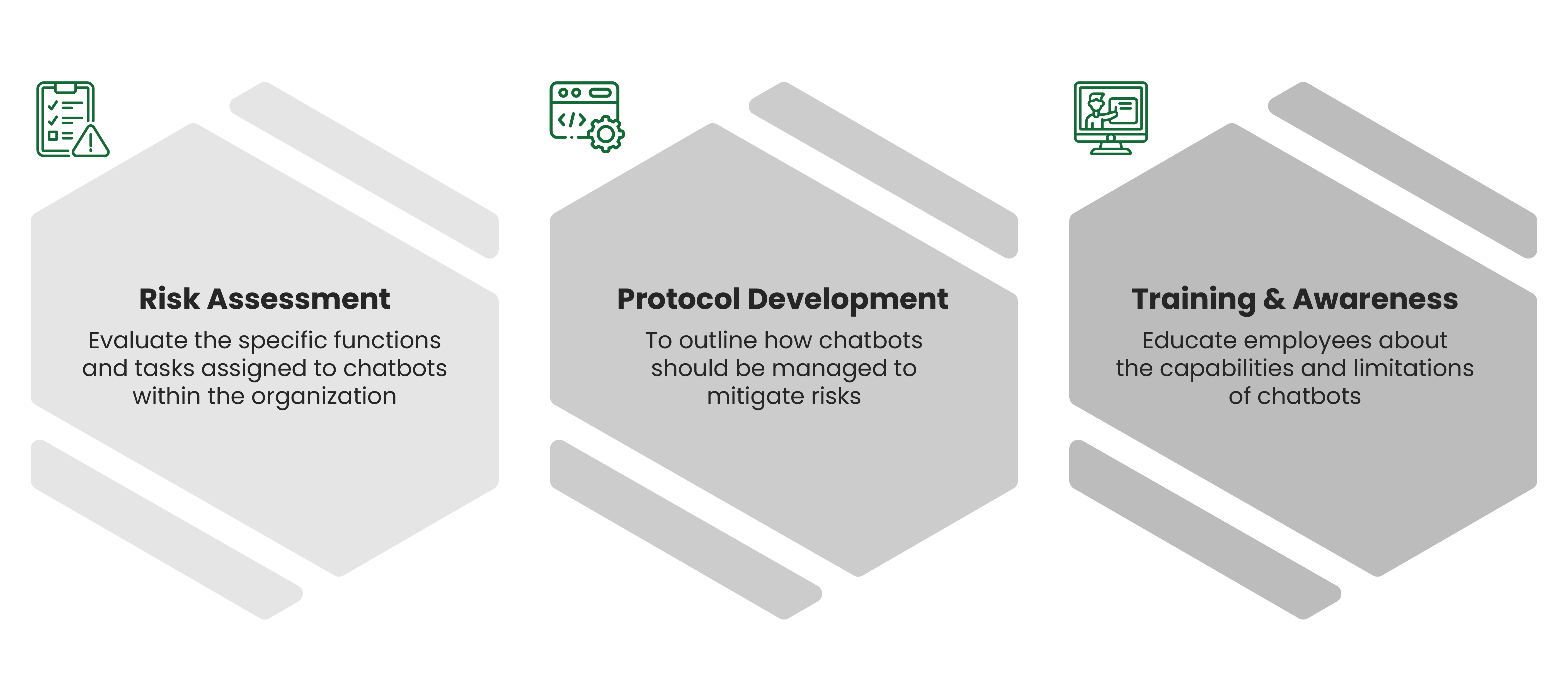
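To make the two axes concrete, here is a minimal Python sketch of how a team might sort chatbot use cases into the four cells of such a framework. It assumes each use case can be scored with two yes/no answers: is response accuracy critical, and is the response easy to verify? The `Quadrant` labels, the `classify` function, and the example use cases are hypothetical placeholders chosen for illustration; they are not necessarily the names used in the figure.

```python
from enum import Enum


class Quadrant(Enum):
    """Placeholder labels for the four cells of the two-axis framework."""

    CRITICAL_EASY_TO_VERIFY = "accuracy critical, response easy to verify"
    CRITICAL_HARD_TO_VERIFY = "accuracy critical, response hard to verify"
    NONCRITICAL_EASY_TO_VERIFY = "accuracy less critical, response easy to verify"
    NONCRITICAL_HARD_TO_VERIFY = "accuracy less critical, response hard to verify"


def classify(accuracy_critical: bool, easy_to_verify: bool) -> Quadrant:
    """Map the two yes/no judgments onto one of the four cells."""
    if accuracy_critical:
        if easy_to_verify:
            return Quadrant.CRITICAL_EASY_TO_VERIFY
        return Quadrant.CRITICAL_HARD_TO_VERIFY
    if easy_to_verify:
        return Quadrant.NONCRITICAL_EASY_TO_VERIFY
    return Quadrant.NONCRITICAL_HARD_TO_VERIFY


# Hypothetical use cases, scored by hand purely for illustration.
use_cases = {
    "brainstorming campaign slogans": (False, False),
    "answering refund-policy questions": (True, True),
}

for name, (critical, verifiable) in use_cases.items():
    print(f"{name}: {classify(critical, verifiable).value}")
```

The value of a sketch like this is less the code than the discipline it forces: every chatbot deployment gets an explicit answer on both axes before anyone decides how much human review it needs.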

Implementing the Framework

Deploying this framework involves several practical steps:

In addition to the strategic framework, establishing both technological and organizational guardrails is essential to safeguard against the misuse of AI-generated content:

Technological Guardrails: Implement advanced data verification tools, enhance the transparency of chatbot decision-making processes, and ensure that AI models undergo regular updates and audits to improve their accuracy and reliability.

Organizational Guardrails: Develop clear policies and guidelines that dictate the acceptable use of chatbots. These policies should address ethical considerations, data security, and the alignment of chatbot deployment with overall organizational objectives and values.

Generative AI tools like ChatGPT and Claude represent a powerful form of intelligence augmentation that can greatly enhance human productivity and creativity. But like any tool, they have limitations that must be clearly understood and, where appropriate, mitigated.

By taking a thoughtful approach that combines the speed and assistance of AI with human judgment, oversight, and domain expertise, we can harness the incredible benefits of language models while managing the inherent knowledge risks and gaps in their training.

The most effective uses of generative AI will involve symbiotic collaboration between humans and machines, with each complementing the other’s strengths. Humans provide contextual reasoning, real-world validation of knowledge, and guidance for the AI’s knowledge acquisition. In turn, the AI acts as a multiplier on human intelligence by rapidly generating ideas and analysis and by facilitating tasks.

As AI technology continues to evolve, so too will the strategies to mitigate its associated risks. Continued research and development will be critical in refining AI models to reduce errors and enhance their understanding of context and nuance. Furthermore, as regulatory frameworks around AI usage mature, organizations will need to stay informed and compliant with new guidelines and standards.

AI chatbots offer significant benefits, from enhancing operational efficiency to enriching customer engagement. However, managing the knowledge risks associated with their use is crucial to avoid the pitfalls of botshit. By implementing a structured risk management framework and establishing robust guardrails, organizations can harness the power of AI chatbots responsibly and effectively, ensuring that these tools augment rather than undermine the integrity of their operations and decision-making processes.

As these models continue to evolve and learn, the human-AI partnership will become an increasingly powerful combination for solving problems and expanding our collective knowledge and capabilities.